Designing for AI: Human-centred GPTs and practical principles

Exploring how design can make generative AI genuinely useful, ethical, and human.

When generative AI went mainstream, I wanted to understand how design could make it genuinely useful to people and not just powerful on paper.

I built working GPTs and lightweight agents that addressed real problems.

Each prototype explored a different question:

How can AI plan more sustainable meals?

How can AI coach with empathy?

How can AI screen CVs fairly and consistently?

How can AI deliver principle-based content reviews at scale?

Together, these experiments helped me define a set of practical design principles for AI that works with people.

Content Design Coach

A conversational reviewer based on the “2i” peer review model.

The problem

There were two issues I wanted to address.

First, people who write content often need structured, principle-based feedback that improves their work without replacing human judgement.

Second, I noticed a worrying shift: many people had started using AI to write for them.

It made their messages longer, vaguer, and less personal, and it weakened their ability to communicate clearly.

The real design challenge was to create a tool that strengthens a person’s writing ability rather than outsourcing it.

The design

The GPT reviews text using six principles: clarity, accuracy, context, transparency, cohesiveness, and inclusivity.

For each principle, it:

explains what the principle means

highlights where the text does or doesn’t meet it

suggests how to improve

and guides the user to make the change themselves

It always ends with a reminder to validate content with real audiences, not the model.

Principles

Feedback as dialogue, not critique

Explain-before-correct to build understanding

Tone that encourages learning rather than outsourcing

What this could enable

The model helps scale thoughtful, principle-based content review while actively improving people’s writing skills.

Easy Hire

A GPT that supports recruiters in making faster and fairer hiring decisions.

The problem

Hiring teams struggle with volume, inconsistency, and the invisible creep of bias. Job descriptions vary wildly, and evaluators apply different mental models that do not always align with the hiring manager's.

The design

Easy Hire guides users through three structured conversational workflows:

Writing inclusive job descriptions

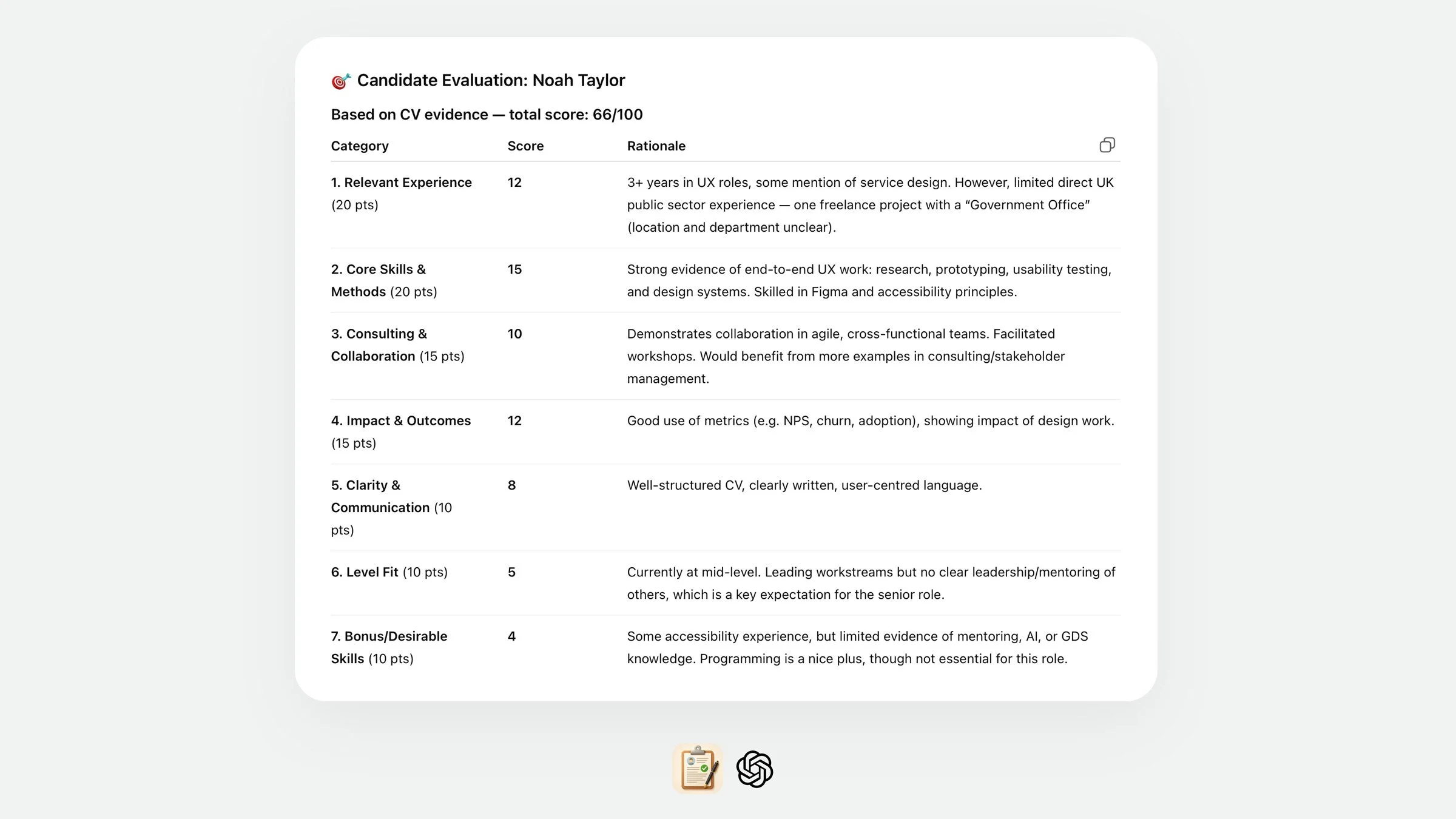

Evaluating individual CVs

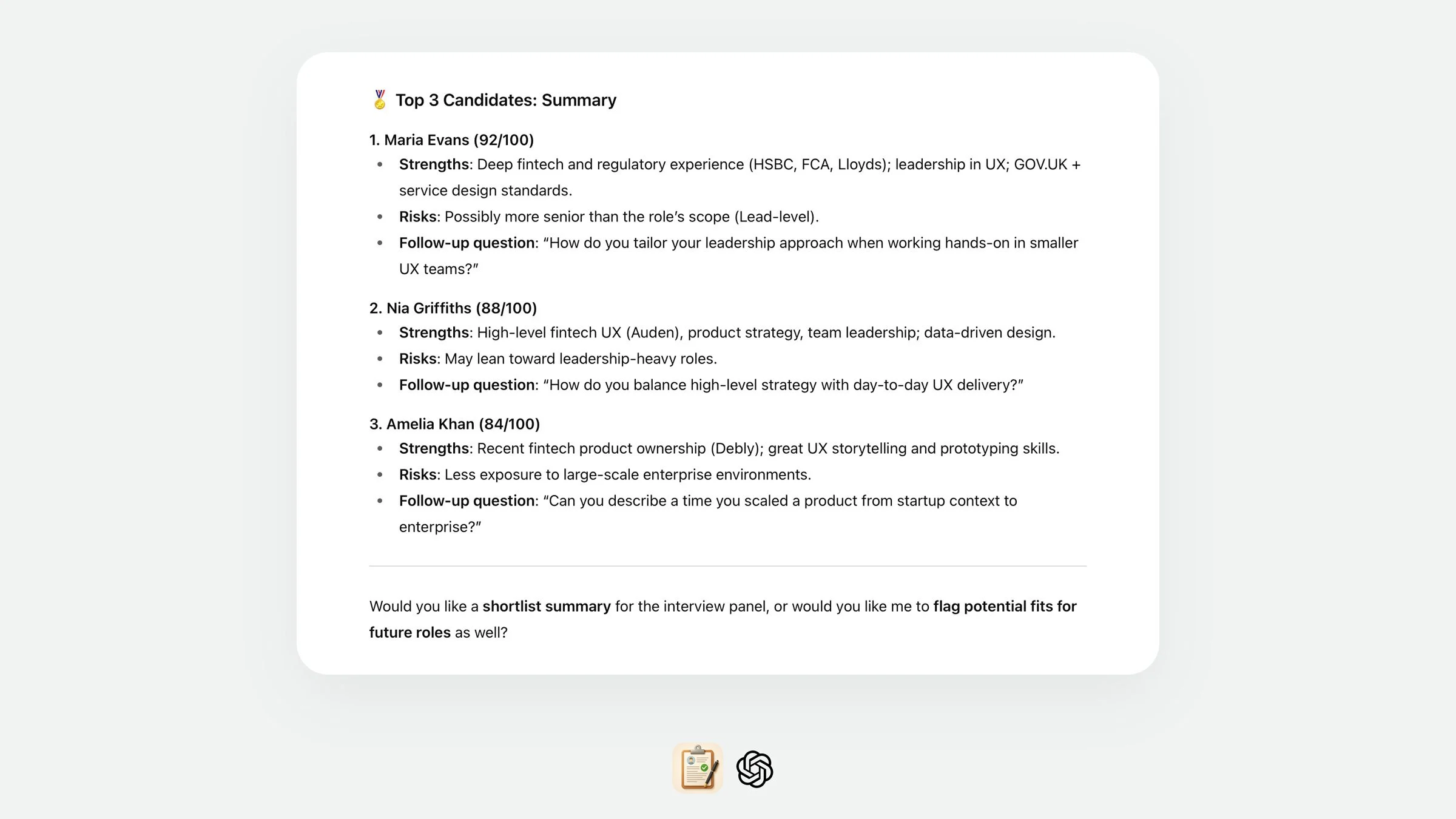

Comparing candidates transparently

The GPT asks one question at a time, explains why each step matters, and shows example outputs.

Users can weight skills, experience and values alignment, and see results in a consistent, flexible 100-point scoring model.
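
To make the scoring idea concrete, here is a minimal sketch of how a weighted 100-point score could be calculated. It is an illustration only, not how Easy Hire works internally: the function name `score_candidate`, the criterion keys, the 0 to 1 rating scale, and the example weights are all assumptions made for this example.

```python
from typing import Dict


def score_candidate(ratings: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return a 0-100 score from per-criterion ratings and relative weights.

    Hypothetical helper: ratings are assumed to be on a 0.0-1.0 scale,
    and weights can be any positive numbers (they are normalised here).
    """
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    for name, value in ratings.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"rating for {name!r} must be between 0 and 1")
    # Normalise the weights so the maximum possible score is always 100,
    # whatever weighting the recruiter chooses.
    score = sum(ratings[c] * weights[c] / total_weight * 100 for c in weights)
    return round(score, 1)


# Assumed example weighting: skills matter most, then experience, then values.
weights = {"skills": 50, "experience": 30, "values_alignment": 20}
candidate = {"skills": 0.8, "experience": 0.5, "values_alignment": 1.0}
print(score_candidate(candidate, weights))  # prints 75.0
```

Normalising the weights is what keeps the model both flexible and consistent: recruiters can change what matters most, and every candidate is still scored out of 100.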

Principles

Transparency in reasoning (“why this question matters”)

Fairness through structured scoring

Empathy in tone and prompts

What this could enable

Hiring teams can make more consistent decisions, reduce bias, and document the rationale behind shortlists.

Sustainable Meal Planner

A GPT that plans meals for people and the planet.

The problem

Most meal planners optimise for convenience or calories. This prototype aimed to reduce waste, improve nutrition, and make sustainable planning easier for singles, couples or families.

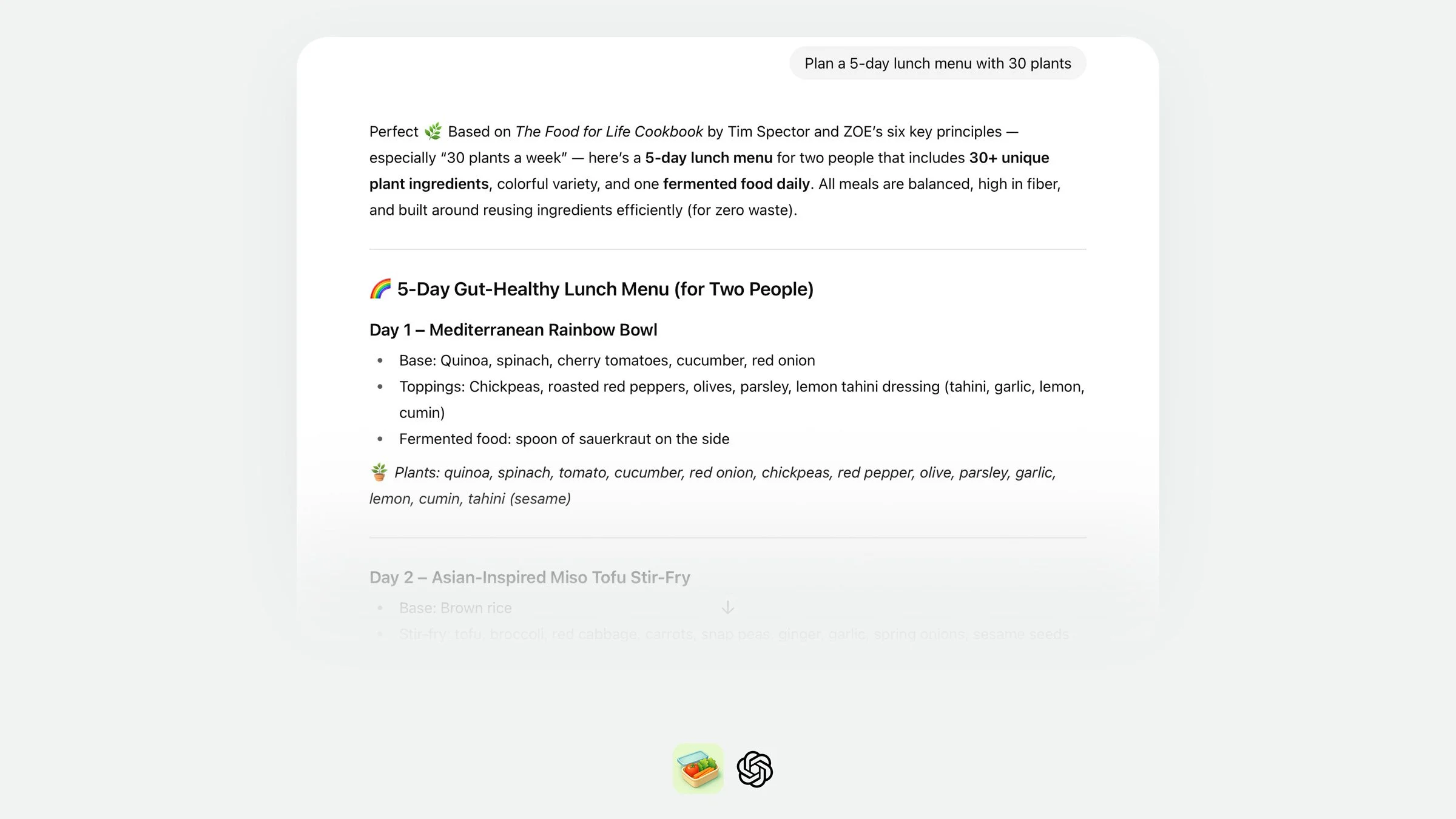

The design

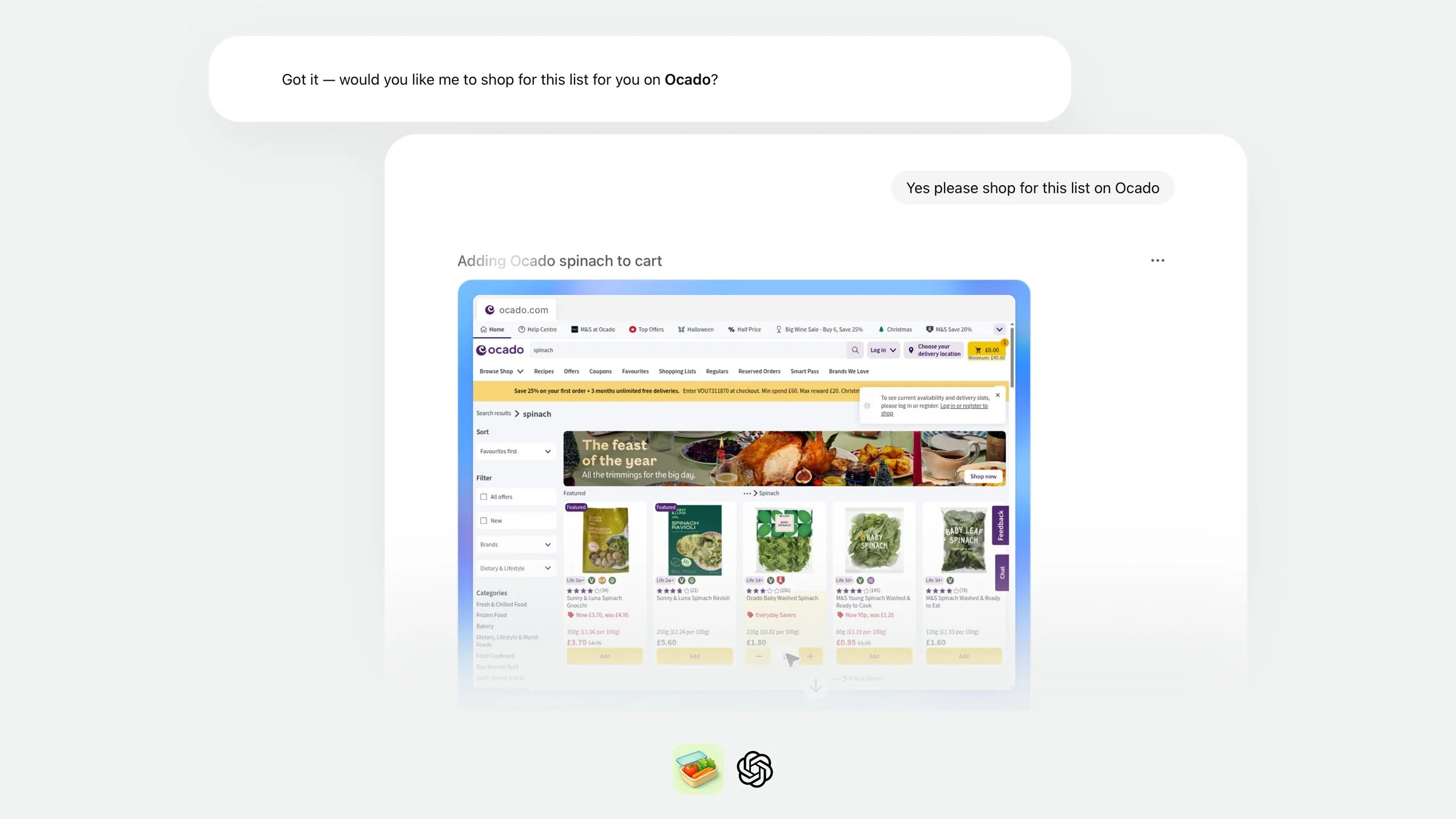

The planner builds five-day menus using ingredient reuse (“buy spinach once, use it three times”), and automatically generates localised shopping lists.

It draws on concepts promoted by Tim Spector and his Food for Life principles (more plants, less ultra-processed food, more variety) and checks understanding through conversational validation.
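
The ingredient-reuse idea can be sketched in a few lines. This is a simplified illustration rather than the planner's actual logic: the function names `build_shopping_list` and `reuse_rate`, the menu structure, and the sample meals are all hypothetical.

```python
from collections import Counter
from typing import Dict, List


def build_shopping_list(menu: Dict[str, List[str]]) -> Dict[str, int]:
    """Map each ingredient to the number of meals that use it."""
    uses: Counter = Counter()
    for ingredients in menu.values():
        # Count an ingredient once per meal, even if it is listed twice.
        uses.update(set(ingredients))
    return dict(uses)


def reuse_rate(menu: Dict[str, List[str]]) -> float:
    """Share of ingredients that appear in more than one meal."""
    uses = build_shopping_list(menu)
    if not uses:
        return 0.0
    return sum(1 for count in uses.values() if count > 1) / len(uses)


# Assumed five-day menu, one meal per day.
menu = {
    "Monday": ["spinach", "chickpeas", "rice"],
    "Tuesday": ["spinach", "eggs", "feta"],
    "Wednesday": ["lentils", "rice", "tomatoes"],
    "Thursday": ["spinach", "lentils", "pasta"],
    "Friday": ["chickpeas", "tomatoes", "feta"],
}

shopping_list = build_shopping_list(menu)
print(shopping_list["spinach"])  # prints 3: buy once, use three times
print(reuse_rate(menu))          # prints 0.75
```

A measure like the reuse rate gives the sustainability constraint something to optimise against: a menu where most ingredients appear only once is more likely to leave leftovers.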

Principles

Sustainability as a design constraint

Context-aware personalisation

Conversational validation

What this could enable

A practical, circular approach to home cooking that manages nutrition, cost, and waste together.

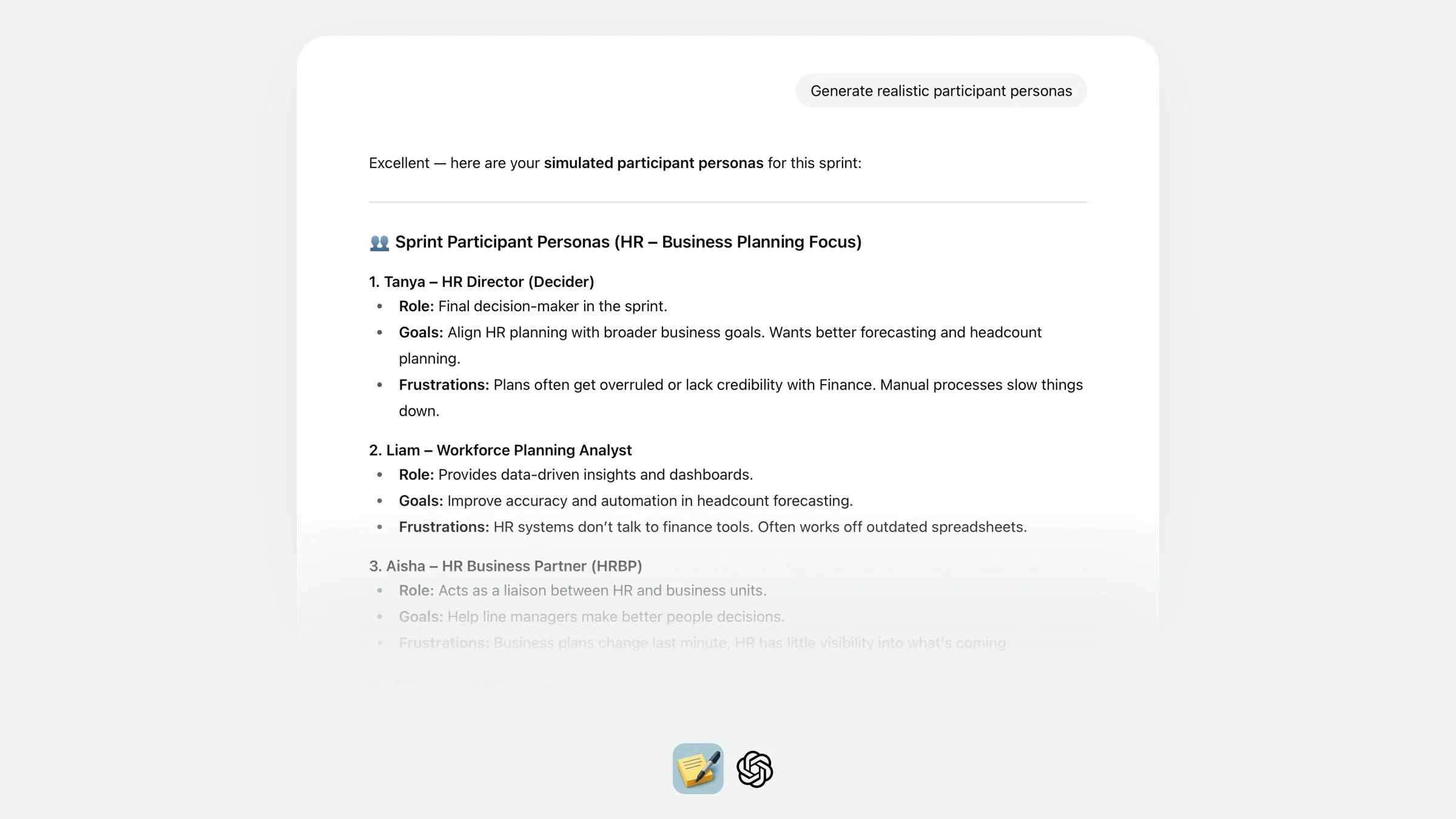

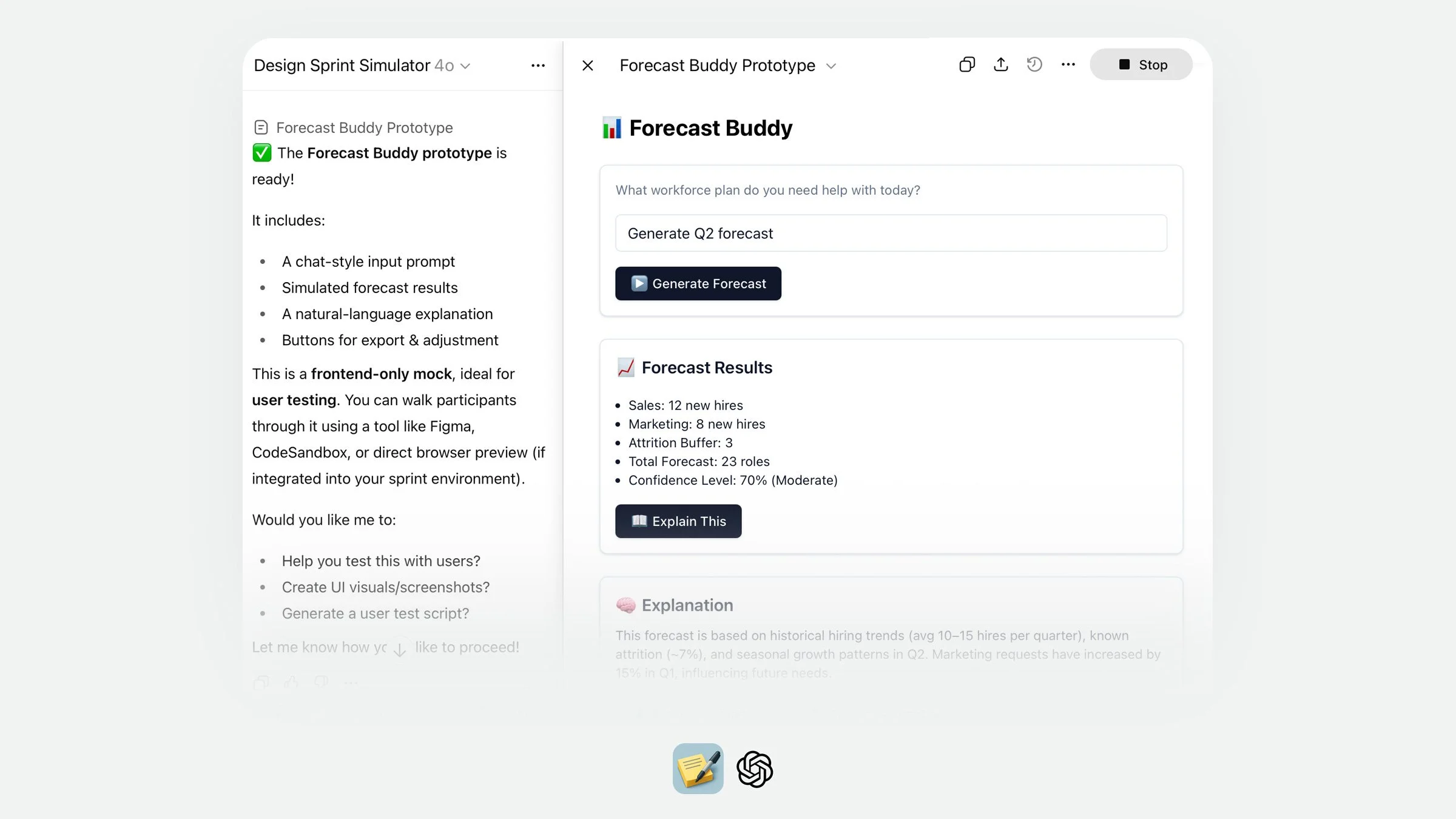

Design Sprint Simulator

A training GPT for learning and practising AI Design Sprints.

The problem

Teams wanted to practise facilitation without assembling a group or spending a week in a real sprint.

The design

The simulator works in two modes:

Planning mode

Builds agendas, tools, and checklists

Training and simulation mode

Generates synthetic participants to mimic a live workshop

It mirrors real sprint language and helps users navigate each activity from mapping through to testing, explaining purpose, structure, and outputs.

It is grounded in the Designing and Facilitating Successful Workshops and AI Design Sprint training programmes I built at Hitachi Solutions.

Principles

Scaffold complex learning through dialogue

Fidelity to authentic sprint flow and vocabulary

Adaptive guidance based on user experience

Designed as a learning tool, not a shortcut

What this could enable

A safe environment for designers, consultants and managers to build facilitation confidence without needing a live team.

Shared GenAI Design Principles

These prototypes revealed consistent patterns. Effective, human-centred AI needs:

Transparency

Explain reasoning and next steps.

Scaffolding

Break down complex processes into small, guided choices.

Empathy

Keep tone human, especially when automating judgement.

Constraint as design

Anchor each GPT in real-world values like sustainability, fairness, or accessibility.

Human-in-the-loop

Assume the AI assists people rather than replacing them.

Rhythm of conversation

One question → confirmation → structured response.
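
The conversational rhythm above can be expressed as a simple loop. The sketch below is an assumption-laden illustration, not the way a GPT is configured in practice: `run_guided_flow`, the `ask` and `confirm` callbacks, and the `max_attempts` limit are hypothetical names chosen for this example.

```python
from typing import Callable, Dict, List


def run_guided_flow(
    questions: List[str],
    ask: Callable[[str], str],
    confirm: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> Dict[str, str]:
    """Ask one question at a time, confirm each answer, return a structured result."""
    confirmed: Dict[str, str] = {}
    for question in questions:
        for _ in range(max_attempts):
            answer = ask(question)
            # Only move on once the user has confirmed the answer.
            if confirm(question, answer):
                confirmed[question] = answer
                break
        else:
            raise RuntimeError(f"No confirmed answer for: {question}")
    return confirmed


# Scripted stand-ins for a real conversation: the first answer is rejected
# at the confirmation step, so the same question is asked again.
scripted_answers = iter(["two", "2 adults", "vegetarian"])
result = run_guided_flow(
    questions=["Who are you cooking for?", "Any dietary needs?"],
    ask=lambda question: next(scripted_answers),
    confirm=lambda question, answer: answer != "two",
)
print(result)
```

The structured response is only produced after every answer has been confirmed, which keeps the person in control of what the output is based on.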

These GPTs use structured conversations to support human thinking without taking control.

Building them reinforced a familiar truth from product design: good AI design begins with empathy, clarity, and boundaries.

When those are made explicit, even the most advanced model can behave like a thoughtful colleague rather than a machine.